parameters <- list(

# ~ Model settings ------------------------------------------------------

settings = list(

state_names = state_names, #state names

Dr_C = input$Dr_C, #Discount rate: costs

Dr_Ly = input$Dr_Ly, #Discount rate: life years

THorizon = input$THorizon, #Time horizon

state_BL_dist = c(1, 0, 0, 0), #starting distribution of states

combo_dur = 2 #number of states with combination therapy

),

# ~ Transition probability matrices (TPMs) ------------------------------

transitions = list(

TPM_mono = TPM$mono, #Control arm transition probability matrix

TPM_combo = TPM$combo #Intervention arm transition probability matrix

),

# ~ Costs ---------------------------------------------------------------

Costs = list(

DM_CC_costs = data.frame(

unlist(DM_CC_costs$state_A),

unlist(DM_CC_costs$state_B),

unlist(DM_CC_costs$state_C),

unlist(DM_CC_costs$state_D)

),

drug_costs = drug_costs,

state_costs = state_costs

)

)14 R and shiny in HTA

Rose Hart

Lumanity, Sheffield, UK

Darren Burns

Delta Hat, Nottingham, UK

Mark Strong

University of Sheffield, UK

Andrea Berardi

PRECISIONheor, UK

Dawn Lee

University of Exeter, UK

14.1 Introduction to the shiny package

14.1.1 What is shiny and how can it help me make my models accessible?

shiny is an R package that makes it easy to build interactive web applications within R (Chang et al., 2021). shiny provides a wrapper around your existing functional R code, allowing you to host stand-alone applications on a web page, embed them in rmarkdown or quarto documents and build dashboards. This is helpful if the model target audience is unfamiliar with R, as the application can be used entirely within a web browser and without the user being exposed to any R code (or even having R installed on their local machine!). The functionality available within the shiny package alone is considerable, and the package undergoes regular updates. CSS themes, html, and JavaScript can also be used to further develop the graphics and interactive capabilities of your shiny application.

The optimal modeling platform is one that is fast, transparent, and flexible to required changes in modeling assumptions or supporting data sets, while remaining user friendly (Hart et al., 2020). R alone fulfills the majority of these requirements. However, as a script-based language, R can seem daunting to newer users. shiny is increasingly used by health economic modelers as it allows the developer to make economic models accessible to all types of user (Chang et al., 2021).

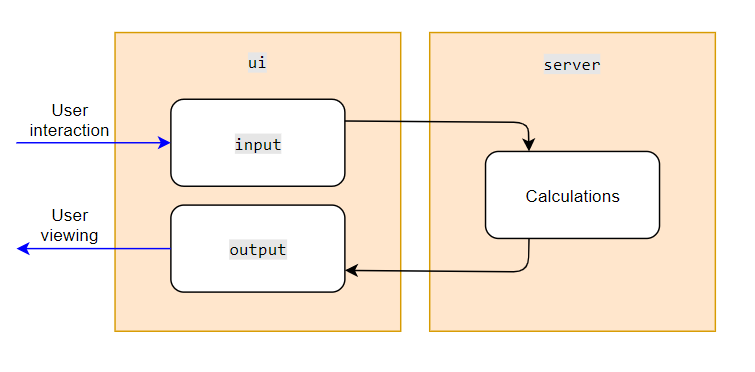

A model built with shiny comprises two parts: a user interface (ui) and a server. The ui forms the basis of how the user interacts with the model and is designed to be user friendly. The server operates as the engine.

A shiny application allows the user to amend inputs in a similar manner to Excel, without needing to access the background R code. In this sense, an R-based model using shiny is comparable to an Excel-based model developed in part using Visual Basic for Applications (VBA) code, but with the capacity for complex statistical analysis, model flexibility and high-quality visuals.

Of course, a shiny interface is not sufficient for a reviewer to understand the underlying mechanisms of a model. Nevertheless, the use of shiny can serve as a bridge between complex functionality and model accessibility, making it easier to directly communicate the results of a model to decisions makers and other stakeholders. (Smith and Schneider, 2020)

14.1.2 What types of models could I use shiny for?

This section describes four types of economic model for which a shiny interface could be used to aid accessibility. These have been chosen to illustrate the advantages of being able to consolidate and calculate in real time the underlying statistics informing health economic decision problems, and of providing the user with an intuitive means of interacting with the data – features that have the potential to improve both the quality of decision making within the pharmacoeconomic space and the efficiency with which decisions are made. There are, of course, plenty of other types of model where this is the case.

We provide basic information on the build process and requirements of these economic models. Some of the examples noted here will be expanded on later within this chapter. It is clear to see that use of R in combination with shiny has the potential to provide significant benefits in health economics and outcomes research (HEOR).

Early modelling and feasibility assessment

Model objective

- Assess feasibility of HTA success

- Understand economically justifiable price

- Identify data gaps and key uncertainties

How can R/shiny help?

- Increase scalability and consistency

- Incorporation of multiple indications and countries

- Integrated end-to-end analysis means fast updates to the model as soon as trial data become available

- Reduce errors and increase efficiency

- No copy-and-pasting

- Export outcomes to

PDF,Word, andPowerPoint - Version control capabilities using

Gitallowing parallel working and tracked changes - User-friendly front-end

- Improved graphics for presentation

HTA models

Model objective

- Demonstrate the economic value of a product to HTA decision makers

How can R/shiny help?

- Increase scalability, flexibility, and consistency

- Incorporation of multiple indications, data cuts, countries, and modelling strategies

- Reduce errors and increase efficiency

- No copy-and-pasting

- Export outcomes to PDF, Word, and PowerPoint

- Version control capabilities using

Gitallowing parallel working and tracked changes - Increase speed: probabilistic sensitivity analysis and one-way sensitivity analysis are approximately nine times faster (Hart et al., 2020)

Examples

intRface™(Hart et al., 2020)- IVI open-source value tools (Jansen et al., 2019)

- Four-state Markov tutorial (Smith and Schneider, 2020)

Eliciting inputs/informing parameters

Model objective

- Conduct and present analyses

- Allow experts to provide informed judgments

How can R/shiny help?

- Increase scalability and flexibility

- Incorporation of multiple data sets and analysis strategies

- View the end outputs of analyses live during expert engagement

- Reduce errors and increase efficiency

- A continuous, end-to-end process is possible, from input of results or analyses through to collation and reporting

Examples

BCEAweb(Baio et al., 2017)SAVI(Strong et al., 2014a)PriMERSHELF(Strong et al., 2014b)- FDA drug safety reporting ratios (

https://openfda.shinyapps.io/RR_D)

Direct-to-payer/value tools

Model objective

- Demonstrate the economic value of a product direct to payers

How can R/shiny help?

- Increase scalability and consistency

- Incorporation of multiple indications and countries

- Improved graphics for presentation

- View the end outputs of analyses live during payer engagement

- Automatically export outcomes to generate payer leave pieces

- Ability to make available online as an open-source model for payers to use

Examples

- IVI open-source value tools (Jansen et al., 2019)

- Immunology treatment sequence value tool (Hart et al., 2024)

14.1.3 How can I learn more?

A tutorial covering the basics of how to build an application using shiny is available from the Rstudio website (Chang et al., 2021). It includes a set of exercises and takes approximately half a day to complete. We would recommend investing the time to go through this tutorial before attempting to add a user interface to an R-based economic model.

The next sections provide:

- Section 14.2: Additional detail regarding examples of shiny applications in health economics

- Section 14.4: Guidance on the practicalities of building a shiny application including

- Health technology assessment (HTA) requirements

- Coding for transparency

- Quality control (QC)

- Deployment

- Workflow and version control

In addition, the online version of this book (available at the website https://gianluca.statistica.it/books/online/rhta) also provides a step-by-step guide to building an application using shiny to wrap around a HTA model.

14.2 Examples of shiny applications in health economics

The Rstudio Shiny gallery provides many examples of different shiny applications, which can be used for inspiration. There are also numerous examples of shiny applications within HEOR. Table 14.1 provides a non-exhaustive list of publicly available HEOR applications built using shiny. Each of these applications serves the purpose of adding an interface onto a process that was written in R, thereby improving accessibility to non-R users and enabling online publication for worldwide distribution. Most the applications described in Table 14.1 and in the Rstudio gallery are hosted using the shinyapps.io website, which links with the Rstudio environment of the programmer and allows easy publication of applications online.

R with shiny could be advantageous

| Application | Authors / group | Model access and summary |

|---|---|---|

intRface™ |

BresMed Health Solutions Ltd (Hart et al., 2020) | This model (https://shorturl.at/apzK6) is part of an explicit comparison between shiny and Excel for modeling a hypothetical decision problem of a non-specified CAR T-cell therapy in B-cell acute lymphoblastic leukaemia (Hart et al., 2020) The purpose of this model is to showcase the potential of using shiny applications for complex modelling, and includes analyses such as propensity score matching and mixture cure modelling alongside standard partitioned survival (Chapter 7) and state transition modelling approaches (Chapter 11). |

IVI |

Innovation and Value Initiative (IVI, Jansen et al., 2019) | IVI has built three models using shiny; the first is for rheumatoid arthritis (https://shorturl.at/cvxS6), the second is for non-small cell lung carcinoma (https://shorturl.at/bdtKO) and the third is for major depressive disorderusing publicly available efficacy data (https://shorturl.at/qwzC5). |

Tutorial using the Sick-Sicker model |

Smith and Schneider (2020) | This application provides an extensive tutorial for the application of a shiny front-end to the Sick-Sicker model developed by DARTH (https://shorturl.at/cuzL8) |

QALY shortfall calculator |

University of Sheffield, University of York and Lumanity (McNamara et al., 2023) | This application is referenced in NICE (2022) and can be used to calculate quality adjusted life expectancy and proportional and absolute shortfall in order to calculate whether or not severity modifers are relevant (https://shiny.york.ac.uk/shortfall) |

SAVI |

University of Sheffield School of Health and Related Research (Strong et al., 2015) | The Sheffield Accelerated Value of Information (SAVI; http://savi.shef.ac.uk/SAVI) model takes probabilistic sensitivity analysis from the user and allows the user to generate i) standardized assessment of uncertainty; ii) overall EVPI per patient, per jurisdiction per year and over the decision relevance horizon; and iii) EVPPI for single and groups of parameters (see Section 1.8). |

BCEAweb |

University College London (Baio et al., 2017) | Part of the BCEA package. On loading the package, it can be accessed by running BCEAweb() and it will deploy locally. Provides an interface to the BCEA package, which is designed to produce health economic evaluations using user-inputted results from a large number of model simulations for all the relevant model parameters. The model uses this input to present the economic and uncertainty analysis results alongside a value of information analysis (Section 1.8). Can be accessed also at https://egon.stats.ucl.ac.uk/projects/BCEAweb |

GH CEA Registry |

Center for Evaluation of Value and Risk in Health (2019) | The Global Health Cost-Effectiveness Analysis (GH CEA) Registry is a database that compiles articles utilizing the cost per disability-adjusted life year metric to measure the efficacy of health interventions. The database is presented in a shiny application (http://ghcearegistry.org/ghcearegistry) |

PriMER |

University of Washington (The Comparative Health Outcomes Policy and Economics Institute, CHOICE, 2020) | The PriMER tool predicts the diffusion of personalized medicine technologies, accounting for the disease, associated test and population. The included calculations are based on a series of preference studies conducted with patients, providers and healthcare payers (https://uwchoice.shinyapps.io/primer) |

14.3 Building a shiny application

14.3.1 Components of a shiny application

As introduced above, applications built using shiny usually consist of two R objects: ui and server. The R command shinyApp() requires both a ui object and a server object in order to generate the application (RStudio, 2017). The alternative command, runApp(), can be used if the scripts generating the ui and server objects are separate, as we would recommend (RunshinyAppRStudio?).

The user interface (ui) list

The ui list contains instructions for the layout of the application, as well as interactive components.ui objects are very flexible; they are suitable for simple presentations of calculations and results as well as complicated interactive inter-dependencies. Due to the web design basis of the shiny functions, the look and feel of the ui can be manipulated using HTML and CSS, as with any standard web page.

The shiny application structure allows several different approaches to programming the ui. For instance, the ui can be broken down into several different files to avoid lengthy blocks of code. Alternatively, ui elements can be generated inside of the server scripts, which allows additional flexibility or interactivity.

The server list

A server object is defined as an R function. This R function usually has three arguments:

inputoutputsession

input and output are lists. The input list contains the input information from the UI at any given time, while output contains the outputs being sent back into the UI after any calculations have taken place. Both the input and output lists exist at the same time and are also interdependent. This means that while a shiny application is running, a constant feedback loop is in place; the server reacts to the individual arguments in the input list by executing functions that are related to any of the inputs when they are detected to have changed. This process then creates outputs that return to the UI. This is closely analogous to a Microsoft Excel workbook when calculations are set to ‘Automatic’.

As computational feedback loops can easily occur in this context, there are several mechanisms available to monitor and manage computation in shiny applications. Within shiny, this is referred to as reactivity. There are many functions that can be used to control the reactivity in the model. For example:

reactive(): Arguments that are wrapped inside of areactive()function will recalculate given any stimulus, i.e. any change in inputseventReactive(): These arguments will recalculate only upon an event, which is specified by the programmer (e.g. pressing a button or switch)

There are many other functions that control what happens when in a model aside from the two that are given as examples here, each with a particular purpose and level of reactivity to the shiny application environment. This facilitates flexibility and control when designing an application.

Overall, the flexibility of a shiny application is the same as the flexibility of general R code. However, because model functions are wrapped within shiny functions, there are some differences in the syntax and flow of computations when compared to basic R scripts. There are online courses that introduce different shiny-specific concepts, while also explaining how to write these functions, and it would help to be familiar with these and use them in conjunction with this section (Shinytutorial?) (DataCamp, 2020). The RStudio tutorial is a good place to learn the basics of working with shiny.

The sub-sections below outline the step-by-step process of building a shiny application. The examples used are freely available in a public GitHub repository, providing full-code examples of the techniques outlined in each sub-section. The steps below can also be used independently of the code examples as a more general guide to the methods and functions used in building a shiny application.

14.3.2 Components of a shiny application

For health economic modelling using R, we recommend developing a basic working model in R before involving shiny. This is for a number of reasons. Firstly, from our experience of developing such applications, the process of quality control (QC) checking and debugging becomes more difficult once the set of R scripts comprising the health economic model have been wrapped in shiny functions application. Secondly, during the development of the health economic model, ideas will typically be generated determining exploration of first order, second order, and structural uncertainties. These will often influence the approach to facilitating and incorporating appropriate analyses, which is easier to implement when the scripts are not already contained within server or ui objects. Thirdly, when designing the ui for a shiny application, the programmer must decide on the level of information available to the end user of the application. For instance, Excel is seen to be transparent due to its high level of perceived verbosity – a user can see all the calculations in the model simply by clicking on the cells. Once a model is functioning, and it is understood which calculations should be presented, an informed decision on how to present the intermediate calculations can be made. Finally, in health economics, code-based models have a reputation of lacking transparency, with the term ‘black box’ being commonplace. However, a well-designed shiny application presenting all relevant information, QC checks, intermediate calculation steps and outputs can achieve a high level of transparency. Writing the initial core of the model in a neat, annotated, and transparent way is more easily achieved without shiny. The programmer can then subsequently focus on achieving the same level of transparency within the shiny framework.

There are many packages that assist with producing aesthetically pleasing outputs that can be highly informative to the user and aid transparency within an R code, which can then be directly used into the ui as an output item. For example, the DT package produces neat tables that are easily customized. Some features include static columns, scrolling, search boxes, paging, and ordering by clicking on columns. DT tables can be used to present data independently of shiny applications, allowing the programmer to visualize the outputs of the code before then adding to the ui (Xie et al., 2021).

ggplot2 is another fundamental package for displaying model outcomes visually. This package can produce a wide variety of appealing and informative graphs that can be used by the programmer at the design stage of the application before wrapping in shiny functions. It is also worth noting that the ggplot2 functions can be used with user-amendable switches (e.g. to toggle a data overlay), sliding scales (e.g. amendable axes limits), and a floating window that provides x and y coordinates (the latter being a feature of shiny itself) to create dynamic and interactive plots. Before including these features, we recommend considering the level of interactivity needed in the application when deciding on the desired graphical output (Wickham, 2016) (RStudio, 2020a).

While the incorporation of shiny after development of the core scripts may lead to changes in functionality and calculations, we recommend keeping the original separate model script up to date. This allows for:

- Easier late-stage QC checking

- Cross-checking the

Rscript with theshinyapplication to ensure they produce the same results.

The second point is very important if the shiny application includes multiple layers of reactivity, as it will help the programmer ensure that the correct processes are happening in the correct order and are triggered when the user expects.

An example of a model within an R script without shiny is shown in the ‘1. R code for HIV model (no shiny).R’ file. This script replicates the HIV/AIDS model from Section 2.5 in Briggs et al.(Briggs et al., 2006) Please take the time to go through and broadly understand this code before moving onto the next example, as this code is adapted throughout all the subsequent code examples. The code is organized with all of the user-defined functions stated and commented at the top of the code, before splitting the model code up into sections. Because R code is linear, this can make it very easy to perform QC checks and trace through.

Users running this code will see that the R script includes data tables and graphs to inform the outputs and also QC messaging. The intermediate calculations and results were written so that they can be directly wrapped by shiny functions; any result that is used as an input to another function, or is to be displayed in a graph or table, is returned to the top level. Additionally, the input object is produced as a list, which allows the programmer to identify the inputs that they may want to have as user amendable in the front-end of the code.

When designing models, it is a good idea to ensure that there is a central list where inputs can be gathered and then used as a single list to inform all of the functions and the production of the results in the model. This has been done in this example in the parameters list. Arranging inputs in this way is especially important in a shiny application because, like an Excel model, there is the option for the model to instantaneously update and be reactive. Setting these reactive dependencies is an important part of designing a shiny application, so having a central list to allow a break to control the level of reactivity is recommended. This will be explored in Code Example 3 onward.

The script below shows the parameters list within the model code. This indexes all inputs into sub-lists and all information that the model is dependent on is passed through this list to the model engine functions.

14.3.3 Designing the user interface

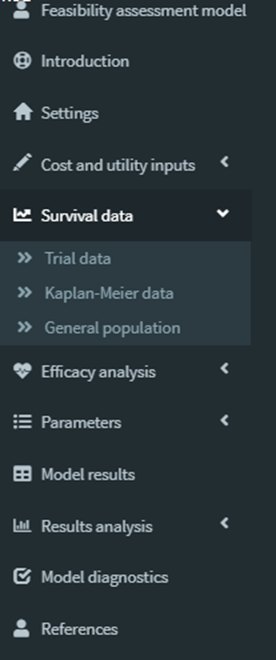

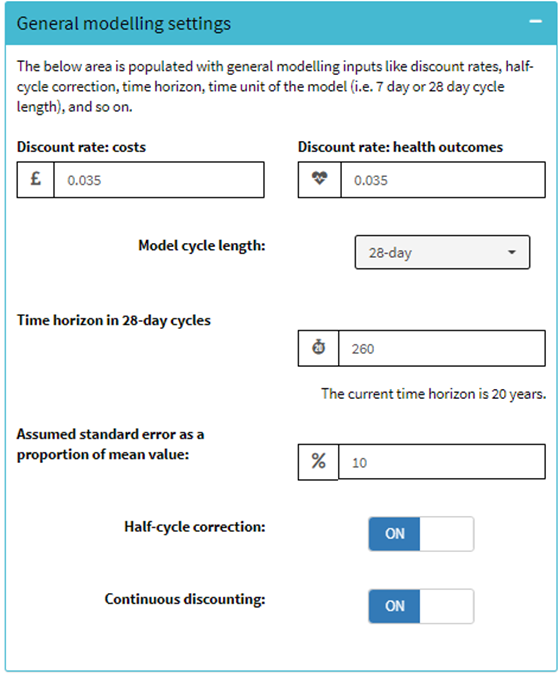

The UI is the part of the application that faces the user. The ui object is the layout of the inputs and outputs in the application, as well as any additional text to be included in the web page. One of the packages that assists programmers with layout design is shinydashboard.(Chang and Borges Ribeiro, 2018) This package allows programmers to produce and customize an application header with a navigation bar to specified tabs.

The design of the UI can also be directed using CSS. We recommend looking at the examples (RStudio Shiny gallery(RStudio, 2020a)) and experimenting with customization (shinydashboard – Appearance page(RStudioshinydashboard, 2020)) to develop a UI design before programming the application. As CSS and HTML are the standard languages of internet web pages, there are a vast number of resources available on the customization of layout, themes, and aesthetics.

Once the look and feel of the ui has been determined, then the layout of the application is a sensible next step. As with Excel, when formulating the layout and types of information presented within the application, the audience must be considered. There may be multiple audiences with different needs – such as company stakeholders, evidence review groups or HTA reviewers, and local payers – wishing to interact with your application, and you will need to consider all of these. Once a desirable layout has been implemented, it is important to consider the options being provided to the user and their compatibility with the server.

An additional consideration when designing the ui script is how the user or developer of the application will be able to perform a QC check on its functionality. Examples of this include ensuring that there are numerous intermediate calculations presented so the user can see how the values flow through the model, and presenting tables with the results of appropriate application tests. We advise using the rmarkdown package to generate reproducible reports of the model functionality, QC checks, and results.(Allaire et al., 2021) (Allaire et al., 2021) (Allaire et al., 2021) rmarkdown can be used from within a shiny server, meaning that such reports can be created by the user with a simple button press within the UI.

Code Example 2

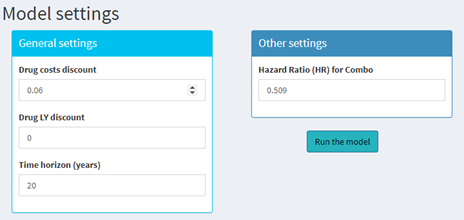

An example of a model UI within a shiny application is in the ‘2. Shiny UI layout.R’ file. This application uses shinydashboard functions to create a simple model layout with a header bar and navigation panel. The navigation panel is ordered according to the different inputs, information and outputs in the model, with icons added to represent each tab.(RStudio, 2020b) The control widgets that make up the inputs are inside boxes that are coloured according to status.(RStudio, 2020c) The overall design of the page is influenced by the shinydashboard appearance guidance for a clear but versatile look.(Chang and Borges Ribeiro, 2018)

Tables of the input clinical data and state and drugs costs are presented in the tabs using the functions from the DT package.(Xie et al., 2021) Placeholders have been added by text to plan the layout of results tables and graphs. The ‘Test outputs’ box is for the user to view the active values in the server; this way the user can see that the interaction with the selected inputs (in this case the Time horizon and the Combo arm hazard ratio) is read by the server, which in turn is rendered as a text output. This is in preparation for future code versions, where the inputs are read by the server and then passed through to the required functions.

Looking at the code itself, the ui and server are both presented in the app.R file. The shinydashboard functions dashboardPage, dashboardSidebar and dashboardBody are appropriately spaced and commented, allowing easy viewing for the programmer and reviewer. A similar approach is used for defining the input and output lists in the ui and rendering the outputs in the server function. Sections are used in the code to aid navigation.

Although this application is simple and does not have any functionality in the server (aside from presenting tables) the code is over 400 lines. Because the application code is read as two large functions, as opposed to multiple small functions in Code Example 1, navigation and commenting are all the more important in shiny applications because it is not possible to run the individual code increments. Therefore, it is recommended that good sectioning and commenting code be used throughout the application to assist both the developer and reviewer.

The following code is an example of how the ui code can be presented; it creates the two input boxes within ‘Model settings’ tab. The code is followed by the output viewed in the model UI.

dashboardBody(

tabItems(

tabItem(

# ~~ Model settings --------------------------------------------------------

tabName = "tab_Settings",

tags$h2("Model settings"),

fluidRow( # `R` Shiny normally renders vertically. fluidrow allows horizontal layering

column( # Columns within fluidrow. the width of the whole page is always 12

4,

box(

title = "General settings",

width = 12, # This '12' refers to the whole space within the column

solidHeader = TRUE, # Whether you want a solid colour border

collapsible = TRUE, # Collapsible box

status = "info", # This is the reference to the colour of the box

numericInput(

inputId = "Dr_C",

label = "Drug costs discount",

value = 0.06,

max = 1,

min = 0,

step = 0.01

),

numericInput(

inputId = "Dr_Ly",

label = "Drug LY discount",

value = 0,

max = 1,

min = 0,

step = 0.01

),

numericInput(

inputId = "THorizon",

label = "Time horizon (years)",

value = 20,

max = 100,

min = 10,

step = 1

)

)

),

column(

4,

box(

title = "Other settings",

width = 12,

solidHeader = TRUE,

collapsible = FALSE,

status = "primary",

numericInput(

inputId = "combo_HR",

label = "Hazard Ratio (HR) for Combo",

value = 0.509,

max = 2,

min = 0.001,

step = 0.001

)

)

),

## Code continuesCode output viewed in UI:

14.3.4 observe and reactive

shiny server objects require the input list object. As shown in Figure @ref(fig:shiny-model-struc)), the object input originates from the ui object, which feeds into the input argument of the server function. The information from input list is then fed into the functions contained within server. Once those calculations are complete, the server populates the output list, which then feeds back into the ui object to be displayed. This process occurs continuously while a shiny application is active. As described earlier, the recalculation of objects within the server is referred to within shiny as reactivity. An object that reacts (i.e. recalculates in response) to external stimuli is referred to as a reactive object.

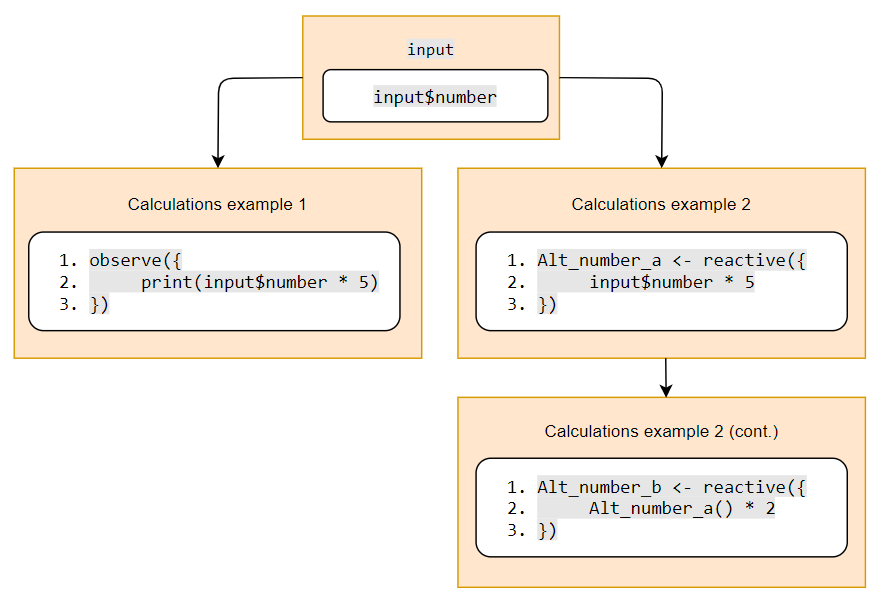

shiny application structureThe two simplest reactive objects are observe and reactive. observe and reactive are triggered for recalculation whenever there is any change whatsoever in the object inputs, each with a different purpose. observe executes a set of actions, whereas reactive produces an object. The difference between the two is illustrated in Figure @ref(fig:shiny-model-obsreac)).

observe and reactiveIn this example, input$number is used by observe to multiply the input number by 5. It does not create an object, and in order to see the results in the R console, print must be used. Whereas, reactive is used to generate an object Alt_number_a, which can then be used in further calculations. In this example, Alt_number_a is included in another reactive calculation, creating the object Alt_number_b.

When writing the set of calculations to be performed in a shiny health economic model, we advise that this process is broken down into a chain of manageable chunks, rather than a few monolithic reactive calculations. This is because observe can be used to print some intermediate calculation results to the console, whereas no reliable QC process can be applied to large blocks of code which cannot be interacted with. We also recommend using reactive to generate objects for the output list during the process, as these can easily be incorporated into the UI to improve transparency and ease or reduce the QC process. An additional benefit of splitting up code and presenting numerous intermediate steps is that the reactivity of the model (i.e. how much and to what extent the model is recalculating) can be more easily monitored to maintain the responsiveness and efficiency of the calculations.

In the case of Figure @ref(fig:shiny-model-obsreac), every time input$number is changed in the script, the observe and reactive approaches will both recalculate. input$number * 5 will be calculated in both expressions, the results will be printed to the console in the case of the observe statement, and the objects Alt_number_a and Alt_number_b will be generated from reactive.

On the ui side, if an output containing the value Alt_number_a() is created, this will change too if input$number is changed. Limiting the level of reactivity is an important consideration for keeping the model run times low and to control when particular functions are executed, and we address how to deal with this issue in the next section.

Both observe and reactive are useful for performing model calculations while designing the model functionality to link the input and output objects. Provided that the design stage has been thorough, there should be minimal difference between the design code and the application code, except for the shiny wrapping functions and the separation into ui and server objects.

Code Example 3

This code brings together the functionality design from Code Example 1 and the ui design in Code Example 2. This code uses the observe and reactive functions to link together the input list from the ui to the functions in the server to produce the output list. Producing the model graphs and tables in Code Example 1 improves the efficiency of this process; you will notice as you go through the Code Example 3 that there are significant similarities to the layout and structure of Code Example 1.

This application is also laid out in three separate scripts: app.R, server.R and ui.R. This naming format allows the scripts to be identified by R as an application, and allows the runApp() function to be used along with its associated arguments for activating the app.

This code is in the ‘3. Shiny app with observe() and reactive()’ file. It uses two different types of intermediate calculation reporting to demonstrate how each can be used: printing to the UI, and printing to the console. Experimenting with changing the input values in the ui and observing the reaction within the ui text outputs and console messages will allow the user to view the active values in the server and the reactivity of the model.

Because of the length of the code, segments are presented in the examples below. The settings inputs are not changed from Code Example 2, but are now found within the ui.R script. Drug unit costs are not changed from Code Example 2, but are now rendered within the server.R script. drug_costs is now wrapped in reactive() as it is an object that is dependent on input$THorizon and needs to be subsequently used in downstream objects and functions, such as the parameters list.

drug_costs <- reactive({

list(

AZT_mono = rep(Drug_unit_costs$AZT_mono,input$THorizon),

lamivudine = c(rep(Drug_unit_costs$lamivudine,2),rep(0,input$THorizon - 2)),

combo = rep(Drug_unit_costs$AZT_mono,input$THorizon) + c(rep(Drug_unit_costs$lamivudine,2),rep(0,input$THorizon - 2))

)

})The parameters list is also wrapped in reactive() as it is dependent on other reactive objects and input list objects. It is also used to display in tables and in the functions to run the model. Note that drug_costs is referenced as a function in this code because it is now a reactive object.

parameters <- reactive({

list(

# ~~ Model settings --------------------------------------------------------

#These are laid out as an embedded list to show off what you can do with a list

settings = list(

#state names

state_names = state_names,

#Discount rate: costs

Dr_C = input$Dr_C,

#Discount rate: life years

Dr_Ly = input$Dr_Ly,

#Time horizon

THorizon = input$THorizon,

#starting distribution of states

state_BL_dist = c(1, 0, 0, 0)

),

# ~ Transition probability matrices (TPMs) ----------------

…, Transition probability matrices not presented for this illustrative example

# ~ Costs --------------------------

Costs = list(

DM_CC_costs = data.frame(

unlist(DM_CC_costs$state_A),

unlist(DM_CC_costs$state_B),

unlist(DM_CC_costs$state_C),

unlist(DM_CC_costs$state_D)

),

drug_costs = drug_costs(),

state_costs = state_costs()

))

})14.3.5 observeEvent and eventReactive

observeEvent and eventReactive are similar to observe and reactive, except that instead of reacting to any change in the input list, they are only reactive to specific inputs at the discretion of the programmer. This way, the reactivity of the model can be controlled, and calculations only performed when required. Event-based reactivity is analogous to VBA macros within Excel-based health economic models, being triggered by actions like buttons, switches, or drop-down menu list selections. Like such VBA macros, it is important that the reactivity is intuitive to the user and that the user is informed of the flow of information throughout the process. Again, it is important to consider the appropriate use of reactivity, as this can provide some control of calculation flow and considerably affect the computational performance of the model:

-

Functionality – If there are numerous interrelated steps depending upon a single event (e.g. a button pressed to recalculate the cost-effectiveness model), then there is likely to be a desired order of calculations. Skipping steps in this ordering could mean that non-existent or superseded values are used in calculations without any visibility to the user. In cost-effectiveness modelling, we recommend using

eventReactiveobjects so that the programmer has full control over the order in which the model computes -

Speed – Too much reactivity can considerably slow down a cost-effectiveness model, due to the number of unnecessary computations. However, if there is too little reactivity (e.g. the user having to sequentially push a series of buttons to perform a calculation correctly), then the model can be confusing or even unusable, especially if the dependencies are obscure and the model is not laid out intuitively

One technique to improve the process of writing shiny applications and controlling reactivity is knowing the point at which reactive objects are created. This is particularly important during model start-up, where non-existent or superseded objects can easily feed into a calculation and cause an error. Figure @ref(fig:shiny-model-strtup) illustrates this problem. In this model, there are three inputs defined in the input list in the ui: a numericInput, a selectInput and an actionButton. There are also two observeEvent objects in the server calculations, each printing a message. When the model starts, the message associated with the observeEvent dependent on the numericInput will appear in the console, but the observeEvent dependent on the actionButton will not. Events dependent on an actionButton, either using observeEvent or eventReactive, are not available on start-up, but most other input types such as numericInput and selectInput are. Therefore, in a model where there are numerous reactive elements dependent on a numericInput, these will exist without producing errors. However, if the input to a function depends on an input that is not available on start-up, such as one produced by an actionButton, then these calculations will either not execute at all, or they will execute in ways not intended by the programmer. It is therefore important during development to test the reactivity by printing to console and to the ui, to ensure that the required values exist and are reactive in the way that is expected. There are methods to control how the values go through the model and activate when planned, and these are explained in the next section.

One way for the programmer to plan out reactivity of their model – and to be able to effectively communicate reactivity to users and reviewers – is to produce a flow diagram, such as the one presented in Figure @ref(fig:shiny-model-reacflow). In this example, the input objects are interacted with by the user, and these feed via reactive (indicated by the dashed line) into the parameters object. Hard-coded values are also used in the parameters object and are presented in tables in the output list for the user to view, but these elements are not reactive (as indicated by the solid lines). The parameters list is then fed into functions that run the model and produce objects in the output list, but steps concerning the running of the model and the subsequent generation of results in the output list is controlled by eventReactive, and is dependent upon the user interaction with input$Run_model. Diagrams of the reactive pathways and dependencies within an application can be presented within applications as images, or exported from applications as part of a user guide.

Code Example 4

In this example, the model uses observe and reactive until the point of the parameters, then uses observeEvent and eventReactive after the parameters. The observeEvent and eventReactive objects are dependent on a button called input$Run_model. In this setup, the inputs are always reactive but are not used to run the model until input$Run_model is activated. In opening the application and interacting with the inputs, users will notice that the parameters sheet is populated with the live values, and the console prints the parameters whenever the inputs are interacted with. However, the results do not render until the ‘Run the model’ button is selected.

Because of the length of the code, segments are presented in the examples below. The setting inputs are not changed from Code Example 3, with the exception that they have an action button added under input$combo_HR, called input$Run_model

dashboardBody(

tabItems( #This included the list of all the tabs

tabItem( #This is where a single tab is defined (model settings)

# ~~ Model settings --------------------------------------------------------

tabName = "tab_Settings", # Tab ID

tags$h2("Model settings"),

fluidRow( # fluidrow allows horizontal layering

…, General settings box not presented for this illustrative example

column(

4,

box(

title = "Other settings",

width = 12,

solidHeader = TRUE,

collapsible = FALSE,

status = "primary",

numericInput(

inputId = "combo_HR",

label = "Hazard Ratio (HR) for Combo",

value = 0.509,

max = 2,

min = 0.001,

step = 0.001

)

),

column( # Not all outputs need to be in boxes

8,

offset = 3,

actionButton(inputId = "Run_model", label = "Run the model", style = "color: #000000; background-color: #26b3bd; border-color: #2e6da4")

)

),

… Test outputs not presented for this illustrative example

))Rendered output:

This model version uses reactive until the parameters list is created; because of this, the drug cost calculations and the parameters list are produced using in the same way as was shown in Code Example 3. The parameters list is then used only when input$Run_model is selected. An example of this is when the patient flow values are created; patient_flow is created as a list, patient_flow$disc is created as a sub-list to identify the discounting values to be used. patient_flow$disc$cost uses the time horizon and the annual discount from the parameters sheet to calculate the vector of discounting used at each cycle; it is only created when the input$Run_model button is activated.

patient_flow$disc$cost <- eventReactive(input$Run_model, {

sapply(1:parameters()$settings$THorizon, function(n)

{1 / ((1 + parameters()$settings$Dr_C) ^ n)})

}) 14.3.6 rhandsontable and conditionalPanel

There are many functions that can be used to enhance shiny applications, both within shiny and in other packages. Two of these functions are conditionalPanel and rhandsontable. Code Example 5 focuses on the use of these.

conditionalPanel is a simple way within shiny to make ui sections appear and disappear according to the settings of a particular object in the input list. This is useful if there are certain settings or pages that are only applicable when a particular setting is active.

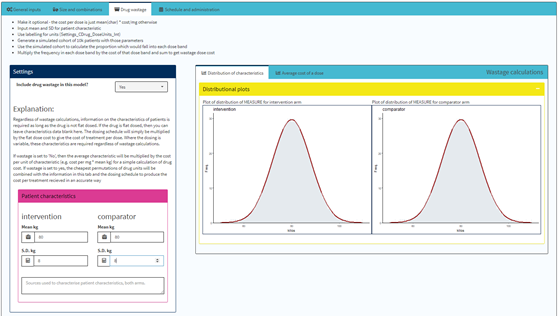

The limitation of typical shiny inputs is that they are mostly only appropriate for inputting single values or settings. The functions within the rhandsontable package allow a table to be used as an input, similarly to Excel. rhandsontable is a JavaScript function adapted for use in R, so it can be flexible to changes including colour, number formats and also validation (restrictions to values, conditional formatting, etc.). It is also possible to add radio buttons, drop-down menus, heatmaps and rendering results; examples of this are available online. (Owen, 2022) Using rhandsontable is a good way of creating an Excel-like feel while also allowing much of the input data to be contained within one input. rhandsontable objects when used as inputs can trigger event-dependent reactive objects, meaning that changing one value in a table can trigger the associated chain of recalculations (just like in Excel).

Because rhandsontable objects exist both in the input list and the output list at the same time, they require some special treatment. rhandsontable objects are defined as outputs in server, rather than as inputs in ui (Figure @ref(fig:shiny-model-rhandsreacflow)). Inputs defined in the ui are available upon model start-up and can be used immediately in functions. However, inputs defined as outputs in the server are not available upon model start-up, and do not automatically ‘exist’ until the page and tab with the output object – in this case, the rhandsontable – is rendered. This is a problem, because reactive functions run on start-up; therefore, if a rhandsontable object is used as an input to inform a reactive or observe function, there will be errors when the model starts as the rhandsontable input will not exist at the time of first execution of the reactive or observe functions. There are a few things that can be done to solve this:

-

shinyhas a functionreq, short for ‘require’, which prevents computation of areactiveorobserveobject until after the arguments withinreqexist. For instance,req(input$number)within anobservewill never compute until the objectinput$numberexists, after which it will act as normal. This command is invaluable in preventing shiny applications from breaking when trying to compute using non-existent objects, as it will prevent the application from computing the value until the input exists. -

Alternatively,

is.nullcan also be used to test whether the input exists. If it does, then the input can be used, and if not, then the base case data informing the input can be used. Usingis.nullis recommended where the input is used to create multiple downstream processes that are required when the model starts up. In this instance, delaying the computation of the function relying on the input using req would not be appropriate as the object created by the input would then not exist, causing error in chain of functionality. -

If using

reactive, this can be changed toeventReactiveand made to be dependent on a button that would only be selected after the input exists. If this option is preferred but needs to be used on model start-up, then theclickfunction can be used to activate the button at start up – provided there is anis.nullcheck.

The function outputOptions can be used to force existence, even when a ui page has not rendered yet. However, even if this function is used within the code, the input does not automatically exist on starting the model. Therefore, when building reactivity into a shiny-based cost-effectiveness model, we recommend that req is used extensively to ensure that objects exist before they are used in a calculation, or is.null if the object has downstream dependencies. This is particularly important if the model has numerous pages without an obvious ordering, where it is possible that the model may be run without the inputs in question ever being rendered.

Figure @ref(fig:shiny-model-rhandsreacflow) shows an example of how reactivity in a shiny model could be ordered if a rhandsontable was used to inform inputs. The reactivity would be similar to that presented in Figure @ref(fig:shiny-model-reacflow); however, the hard-coded values do not inform the parameters. Instead, they inform the rhandsontable in the output list, which is then pushed to the input list via the hot_to_r function activated by the input$Cost_update button in an eventReactive. Because this object was required on start-up, a click function was also used that was inside an observe object and was activated if(input$Cost_update == 0). This was the third option on the above list of potential solutions.

Of note, using this method means that an eventReactive object is now upstream of an object produced by reactive, the parameters list. Therefore, to ensure that all the required inputs are available on start-up when the reactive objects are initially made, an is.null is used around the objects that are directly dependent on the rhandsontable, and the hard-coded values inform the input if the rhandsontable does not exist. req would not be suitable here because the rhandsontable is used to produce downstream objects.

An alternative approach would be to have the parameters list also dependent on input$Cost_update; however, using this approach would mean that the input$Cost_update button would need to be selected before clicking input$Run_model, as otherwise the parameters would not have been produced prior to running the model. Having the parameters available on start-up and using is.null requires additional consideration for reactivity, but means that the user will not have to navigate through the model in a particular order.

Code Example 5

This code has the same reactivity as Code Example 4. Before the ‘Run the model’ button has been selected, the ‘Model results’ page now displays a conditional panel informing the user that the button needs to be selected for the model to run. This is controlled by the conditionalPanel function in the ui.R script. Before buttons are selected, they have a value of 0, this increases by 1 each time the button is pressed; once the input$Run_model button is selected it will have a value of > 0, so the results render.

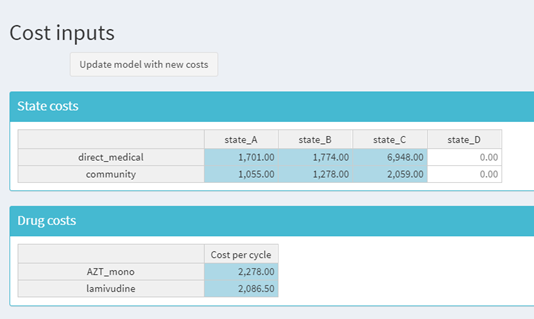

rhandsontable has been used in the ‘Cost inputs’ page to create tables that are user-amendable, unlike the previous versions. Cells which can be interacted with are coloured blue. For the State costs table, State_D is death and in the existing functions there was not the ability for costs to be added after death; as with all models, it is important to only allow user amendable options where appropriate. The tables are defined in the server and are programmed to only be amended with numeric values between 0 and 20000.

Within the server script, the two intermediate objects DM_CC_cost_table_react and Drug_cost_table are eventReactive, dependent on the button to update costs. If the tables have not been rendered then these objects are equal to the base case tables, therefore, the user does not have to visit this page and render this page for the model to work. You will notice however, that this function is dependent on the input$Cost_update button being selected, and there is no guidance on users needing to select this button before running the model. This is because at the bottom of the server script (under the section heading ‘Initial cost setup’) there is the instruction that if the button value is 0, then the button is to be clicked (using the click function). Because the button is activated inside the code, the cost objects will exist when the model starts. This use of the click function could also be used to run the model on model start-up.

The inputs in the ui are not different from the previous code examples, however, conditionalPanel is now used so that the ‘Model results’ page does not render until after input$Run_model is pressed at least once.

tabItem(

tabName = "tab_Model_res",

h2("Model results"),

conditionalPanel("input.Run_model > 0",

# Conditional panels are used to render the UI depending on

# particular values in the inputs, including action buttons

# This "input.Run_model > 0" is only true if input$Run_model

# has been pressed

fluidRow(

box(

title = "Model summary",

width = 12,

solidHeader = TRUE,

status = "primary",

tags$u("Discounted results:"),

dataTableOutput(outputId = "disc_Results_table"),

br(),

tags$u("Undiscounted results:"),

dataTableOutput(outputId = "undisc_Results_table")

),

box(

title = "Model graphs",

width = 12,

solidHeader = TRUE,

status = "primary",

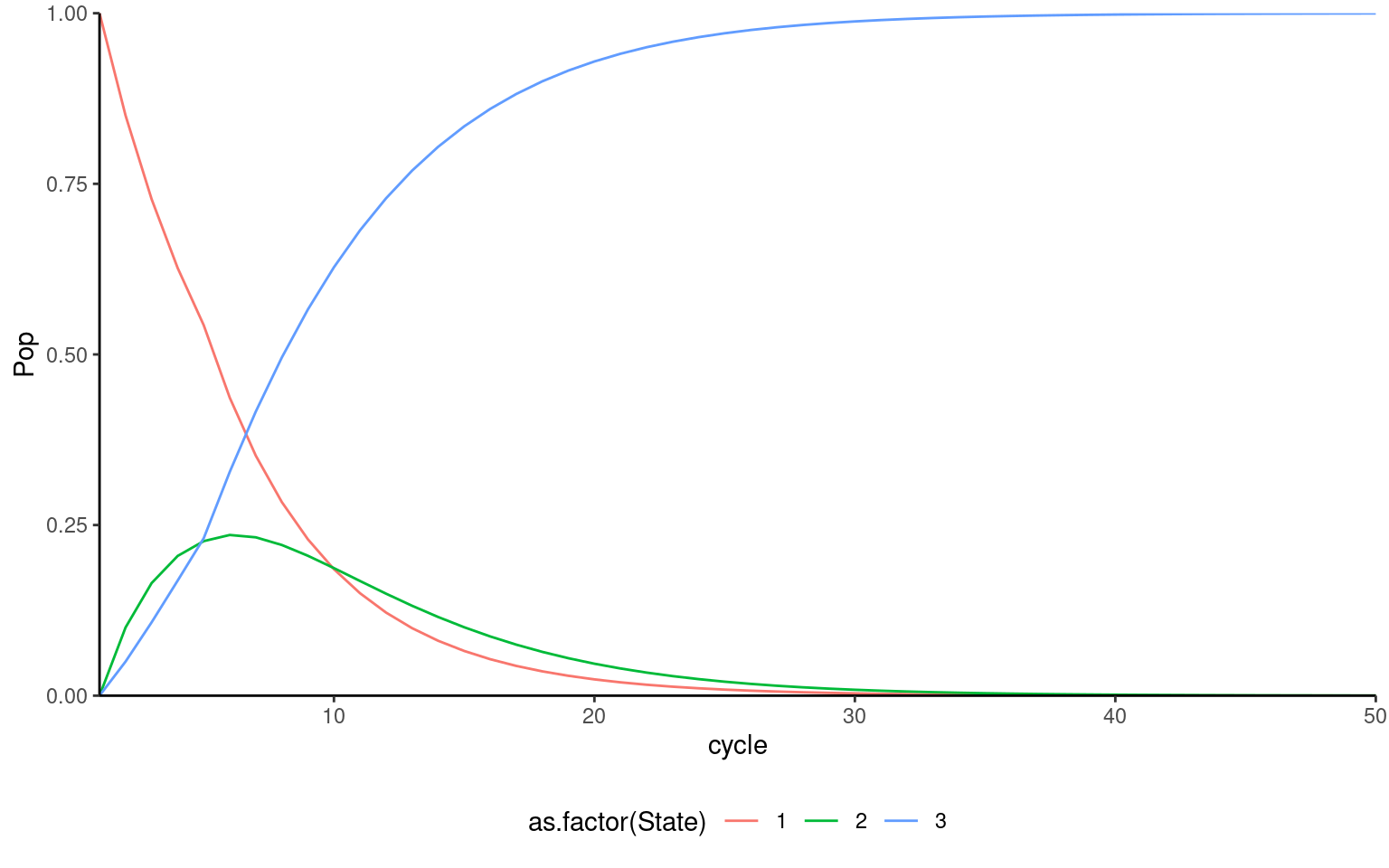

tags$u("Monotherapy trace:"),

plotOutput(outputId = "mono_trace"),

br(),

tags$u("Combination trace:"),

plotOutput(outputId = "combo_trace"),

br(),

tags$u("Comparative trace:"),

plotOutput(outputId = "comparative_markov")

)

)),

conditionalPanel(

"input.Run_model == 0",

# If Run_model has not been selected

tags$h3("Select 'Run the model' button in Model settings to run the model")

)

)The rhandsontable objects are rendered as an output table in the server similar to as if DT had been used. The cells have been coloured blue in this example to indicate that the cells are user-amendable, and a button has been added to convert the output tables to input values as described in Figure @ref(fig:shiny-model-rhandsreacflow).

The drug_costs object is now dependent on Drug_cost_table, which is an eventReactive object that contains is.null to ensure that if the rhandsontable has not been rendered then the base case values will be used. The eventReactive is triggered on start-up by using the click function dependent on the input$Cost_update not having been clicked previously.

Drug_cost_table <- eventReactive(input$Cost_update,{

if(is.null(input$Drug_costs_table)) {

as.data.frame.array(rbind(2278,2086.50), stringsAsFactors = F)

} else {

as.data.frame.array(hot_to_r(input$Drug_costs_table), stringsAsFactors = F)

}

})

# ~~ Intermediate cost calculations ---------------------------------------------

drug_costs <- reactive({

list(

AZT_mono = rep(Drug_cost_table()[1,1],input$THorizon),

lamivudine = c(rep(Drug_cost_table()[2,1],2),rep(0,input$THorizon - 2)),

combo = rep(Drug_cost_table()[1,1],input$THorizon) + c(rep(Drug_cost_table()[2,1],2),rep(0,input$THorizon - 2))

)

})

(bottom of script)

# ~ Initial cost setup --------------------------------------------------------------

observe(if (input$Cost_update == 0){

click("Cost_update")

})14.3.7 uiOutput and modules

Sometimes, the value of an input object needs to be reactive and dependent on the value of another input at the same time. For instance, if a certain value from a drop-down menu is selected, then this may be used to populate the options of another drop-down menu or populate numerical inputs to a list of values. In health economic modelling, this is a common need. For example, when switching comparators from an intravenous comparator to an oral one, the relevant options for dosing could change considerably.

This can be achieved by using uiOutput – a placeholder in the ui script for reactive ui elements. The ui object is created inside server using renderUI. uiOutput can refer to single inputs or entire blocks of interface, provided that the content is wrapped within a single block within the ui (for example, tagList and fluidRow are both used in Code Example 6). renderUI can be wrapped inside observeEvent or observe, meaning that it can simultaneously respond to a change in a model input and present the results of a reactive calculation.

Another useful way to control large sections of reactive ui or functionality is to wrap code within modules. This is where sections of the model server and ui are both written as a separate functions, which can then be added or switched between depending on the requirements of the application. An example would be where there are multiple different methods that are each used independently of one other and activated via a switch. Because these can be kept as entirely separate scripts that can be added to models with minimal changes (provided an underlying structure is present), modules are particularly useful if code would be expected to be recycled between models. Because modules are isolated blocks of code, the same input or output objects can be defined and used across multiple modules, and modules can be used interchangeably in this scenario – provided that modules with the same input or output objects are never active simultaneously. Multiple module scripts can be developed and validated, then kept in a library to allow consistent use between projects.

The drawback of using modules is that, as with all functions, objects defined inside modules cannot be used to inform any reference outside the module; this is the case for all input objects. However, like a function, return can be used to output necessary values that can be used elsewhere and referenced in the same way as a reactive object.

As with all objects defined in the server, it is important to consider whether the uiOutput or a module is required at model start-up, and whether or not they exist at the time of use. Any values required from these values on model start-up will require checks using req, is.null, controlled reactivity, or a combination of all three, to ensure that the model does not fail when starting up.

Code Example 6

Looking through the ui.R script, both the ‘Model settings’ and the ‘Model results’ pages no longer have any content aside from the title, a ‘reset settings to base case’ button, and the uiOutput object. Within the server, two additional sections have been added to render both of these pages as output objects. The inputs that are defined within the renderUI object can still be referenced using the input$xxx syntax the same way that an input object defined within the ui.R script is, however there is a difference in that these inputs are defined in the server as output objects and are therefore not available immediately upon start-up. Because of this, all objects referring to these inputs (e.g. input$THorizon and input$combo_HR) that are within reactive or observe functions include an is.null statement which refers to the base case values during start-up. To view this, at the bottom of server.R is an observe statement with a test for whether input$THorizon is null. On start-up the console initially states that input$THorizon does not exist, input$THorizon then renders on the ‘Model settings’ page, then prints that it does exist after the start-up calculations are complete. A second test looks at the existence of input$Cost_update, which is an input on the ‘Cost inputs’ page; the test shows that at start-up this input object exists, even though the page has not been rendered, because this is defined in the ui.R.

There are functions, such as outputOptions, which can make output objects (including uiOutputs) exist before pages render; however these still do not allow the inputs to exist on start-up, so the model will always produce errors if there is a dependency by a reactive object, therefore is.null or req should always be used in these circumstances. Despite these considerations, uiOutputs are a great way of adding flexibility to the model and adapting the input values according to switches or ‘reset buttons’, the latter of which is demonstrated inside this code by using an observeEvent to wrap the renderUI (and click at the bottom of the script to render the ui at start-up).

Within the ui, all of the previous content has been converted to uiOutput("Model_settings_UI") so that they can be re-rendered if the input$Model_settings_reset_button is clicked. Having the settings as uiOutput objects in the server also means that they can be changed dependent on other settings, for instance if there are multiple base cases or adaptations.

tabItems(

tabItem(

# ~~ Model settings --------------------------------------------------------

tabName = "tab_Settings",

tags$h2("Model settings"),

actionButton(

"Model_settings_reset_button",

"Reset settings to base case",

style = "color: #fff; background-color: #bd3598; border-color: #2e6da4"

),

br(), br(),

uiOutput("Model_settings_UI")

)Also within the ui, the conditionalPanel has been moved and replaced with uiOutput("Model_results_UI") which is rendered inside the server

# ~~ Model results --------------------------------------------------------

tabItem(

tabName = "tab_Model_res",

h2("Model results"),

uiOutput("Model_results_UI")

)In the server, the uiOutput is rendered using renderUI. The same syntax is used to create a conditionalPanel, however, the results themselves are rendered inside a module called Results_UI, with the name “ResultsUI”. This demonstrates the flexibility of deriving ui according to the requirements of the model.

output$Model_results_UI <- renderUI({

tagList(

conditionalPanel("input.Run_model > 0", # Conditional panels are used to render the UI depending on

# particular values in the inputs, including action buttons

# This "input.Run_model > 0" is only true if "input.Run_model"

# has been pressed

# Refer to the module UI here, the id of the UI is put inside the brackets. The name can be anything

Results_UI("ResultsUI")),

conditionalPanel(

"input.Run_model == 0",

# If Run_model has not been selected

tags$h3("Select 'Run the model' button in Model settings to run the model")

)

)

})While these step-by-step points for developing applications should form the foundation of application planning and development, there are additional practicalities that should be considered that are independent of the code itself. These practicalities are introduced in Section @ref(ShinyPract).

14.3.8 Using Reactivevalues lists to centrally manage a shiny app

As the number of uniquely named reactive objects increases, so does the difficulty associated with managing the data flowing through an application. This becomes more obvious when introducing the idea of UI elements containing sets of inputs that move in and out of existence depending on user settings which may or may not exist themselves at any point in time (i.e. nested interdependent UI elements). One way of managing this is to build several independently running modules for different areas of a cost-effectiveness model, using the approaches described in Section @ref(uiModules). This has several advantages in terms of keeping different areas separate and being able to apply namespaces. However, the syntax and cognitive processing involved can make code very difficult to follow, and to convert an existing set of code to use modules can take a considerable amount of time and effort.

An alternative to this approach is to use another type of reactive object, called reactiveValues. A reactiveValues object is essentially a standard R list object (See Sections XXX - Earlier sections in the book about object types), which can house all object types, but exists within the reactive domain. reactiveValues objects are particularly useful because their values can be assigned directly from within an observe or observeEvent. This means that the contents of each reactiveValues element are effectively an expandable and nestable list of reactive objects that do not need to be uniquely named, and do not need defining as reactive or eventReactive. In this sense, one reactiveValues object is like having a data handling module without the additional difficulty of use.

A reactiveValues list, for all intents and purposes, therefore provides much simpler access to a form of namespacing. A particular list containing sub-items has its own name on the top level (e.g. RV for reactiveValues, R for reactive, L for live, D for defaults, and so on), and therefore the items within the list do not need to be uniquely named within the shiny server (e.g. RV$TH could be the time horizon within RV, which could be different to a separate reactive called TH()). When considering a cost-effectiveness model suitable for HTA, one immediately recognises that there may be a requirement for literally thousands of reactive objects to exist to facilitate it in R. This is because a cost-effectiveness model graphical user interface in shiny requires “memory” for elements which may fall out of existence or be refreshed. For instance, in a drug costing framework, one may wish to select how many drugs are included in the model across the various treatment arms, as well as what type of drug each drug is. Each ‘type’ of drug has a different set of required inputs to model cost over time. For instance, flat-dosed treatments do not require information on weight or BSA distribution, whereas IV treatments and banded dosing do. Pill-tracking approaches require another independent set of inputs. Finally, in some more complex situations (e.g. a complex patient access scheme), the user may even want/need to enter cost per cycle directly into an excel-like input area, or even upload an excel file calculating cost over time. As all of these approaches require different sets of inputs, to manually generate them all would result in either a very large and unruly user interface, or a very large and unruly set of R scripts.

This is a common problem that is likely to exist in almost all cost-effectiveness models irrespective of software used to implement them. It is not good practice to simply write out the different user interfaces manually and repetitively, along with large amounts of reactive objects along with isolate commands to let the model ‘remember’ the selections that have been made in the past. Instead, a combination of writing user interface generating code into functions and storing all permutations of data inside of reactiveValues objects provides an easy to use, easy to QC, and almost infinitely expandable platform for building cost-effectiveness models.

The approach we suggest includes a combination of the following four principals:

-

Keep all possible data in a defined set of

reactiveValueslists to allow the Shiny application to “remember” previous selections, and tidy up the flow of data throughout the application. This must include all possible permutations of data in the context of nested and interdependent ui elements (or a mechanism to automatically populate) -

Within the

reactiveValuesobjects there should at least be one that is ‘responsive’, updating instantly when inputs are updated, and another which is ‘live’, updating only when the user commits some changes (i.e. moving the data from the ‘responsive’ object to the ‘live’ object -

Populate all ui elements using the ‘live’ object - this usually requires rendering within

renderUI

In our experience, sticking strictly to these four rules is extremely useful in the context of building cost-effectiveness models in R, when using Shiny as a user interface. It is also an approach which is familiar to cost-effectiveness modelers, in that all data passing through the model passes through a bottleneck which is readable and transparent before being used to calculate results. Finally, one major advantage to this approach is that a model’s state (all inputs and intermediate calculations, and even results) can effectively be ‘saved’ by simply cloning the reactiveValues object describing the current model state (i.e. the reactiveValuesToList() and saveRDS() commands can be used to save an R list containing the state of the model at that time). In the context of interdependent dynamic UIs (See Wickham’s chapter on this here), the ability of shiny to save and load previous states of the application is limited. Shiny uses ‘bookmarking’ to restore the previous state of an application (See Wickham’s chapter on this here). When ui elements are nested and depend on each other, simply restoring the state of objects in the input object is inadequate. For example, if there existed a slider to select a number of drugs included, and a radio button for each drug that currently exists, then restoring the state of the application would restore the slider, which would refresh the buttons, resetting their values to the value they were coded with initially. That is, without a data point to inform the nested elements (which go in and out of existence depending on the higher-level ui elements that control their existence) when they are generated, their values will be ‘forgotten’ by the application upon restoring the application state. This issue is solved using the reactiveValues central data management approach. This is because the ‘live’ object contains data points for all possible permutations of data, irrespective of user selections, and this object never falls out of existence. Thus, it no longer matters if the ui elements are refreshed, their values will be linked to the ‘live’ reactiveValues object. Therefore, simply replacing all of the values in the ‘live’ reactiveValues object will revert the application (as long as the below approach is strictly followed) to a previous state as all data is then the same as it was when the file was saved.

This approach requires some additional groundwork to be laid when making an application, but avoids almost all increases in complication with complication of the application requirements, all whilst preserving transparency and keeping the flow of data ‘tidy’ and under control.

One potential structure for the reactiveValues central data management approach might be:

-

D(i.e. default) -

R(i.e. Reactive) -

L(i.e. Live) -

S(i.e. save)

Where each of the above contain 1st level lists including (for example):

-

basic -

pld -

survival -

drug -

hcru -

utility -

results -

psa -

owsa -

evpi -

etc

Each 1st level list can then contain all information pertaining to that particular theme, and updates to the constituent parts of these objects can be linked to events within the UI of the application (i.e. action buttons to ‘commit’ changes), irrespective of how nested or complex that situation becomes. Equivalently, an entirely different structure can be used to organise model data. However, the above loosely follows the conventional structure which Excel-based cost-effectiveness models follow. For the near future, we expect this to be a structure that health economists in the field worldwide are familiar and comfortable with, so we use it here. The contents of R$basic may be, for instance, R$basic$th (time horizon), R$basic$disc_q (discount rate for QALYs), R$basic$disc_c (Discount rate for costs), and so on, whilst R$pld could house raw and processed/cleaned patient level data (or aggregated analysis results to preserve data security, then requiring a mechanism to load them through), as well as any settings like columns being used for data analysis, covariates, analysis type selections from switches and so on. In other words, keeping all of the information running through the model in one place.

To illustrate further, the list structure of a cost-effectiveness model’s central data object could follow a structure like the one below, where each element below the Root level is itself a list containing multiple objects:

Root

¦--basic

¦--pld

¦ ¦--surv

¦ °--util

¦--survival

¦ ¦--dat

¦ ¦--flexsurv

¦ °--summary

¦--drug

¦ ¦--drug_types

¦ ¦--dosing

¦ ¦--wastage

¦ ¦--cost

¦ ¦--schedule

¦ ¦--subs_tx

¦ ¦--inputs

¦ °--outputs

¦--hcru

¦ ¦--inputs

¦ ¦--schedule

¦ °--outputs

¦--utility

¦ ¦--inputs

¦ ¦--time_to_death

¦ ¦--gpop

¦ ¦--schedule

¦ °--outputs

¦--results

¦ ¦--summary

¦ ¦--detailed

¦ °--ejp

¦--psa

¦ ¦--inputs

¦ ¦--results

¦ °--outputs

¦--owsa

¦ ¦--inputs

¦ ¦--results

¦ °--outputs

°--evpi

¦--inputs

¦--results

°--outputs As can be seen above and in Figure @ref(fig:DRLdiagram), this is intuitive for both those familiar with R and with Excel, and should also be a welcome feature for those that are familiar with cost-effectiveness modeling. Furthermore, as each sub-item in the list structure contains a space for intermediate calculations to be housed, this results in much easier QC and debugging of code.

Example model of controlling data flow in Shiny cost-effectiveness model

In Figure @ref(fig:DRLdiagram), The defaults, D inform live L. Changes to L trigger updates to the UI. Changes to the UI trigger the server, which process and organises the (disorganised) data coming from the UI. The Server now organised information is then passed into R. When D is first inserted into L, once the cleaning process has completed, R should be identical to L, and confirming the changes to update L should trigger nothing. This provides a simple way to test whether the application has started up correctly.

Essentially, D, S, L, and R are complete lists of model parameters, all at different stages. The idea of all parameters passing through one place should be familiar to any Excel-based cost-effectiveness modeller, as a centralised parameters sheet is a very common feature of a typical well-executed Excel-based cost-effectiveness model. The big difference between a parameters sheet within an excel workbook and one of these objects is structure. An R list can contain any number of different types of objects, including live statistical analysis results, numbers, tables, text, Replacing D or L with the saved version S is equivalent to loading a complete previous analysis, meaning that previous analyses can be loaded into the system without closing the application. Note that all of these lists must contain space for every possible permutation of inputs possible in the model, even those that do not exist in the UI at a point in time (for instance if up to 10 drugs can be simulated, the drug input list structure must be laid out for all 10 of them, even if the data elements are just placeholder defaults).

As with initially inserting D into L, when the user changes something in the UI, this triggers actions in the Server which process that information and feed it into R immediately and with a high priority, but does not pass this information into L. At this point in time, R is different to L because the user has not yet committed the changes made to via the UI via a button or some other trigger. When the user commits the changes, the relevant information in R can be placed directly into L. Once L is updated the UI elements that depend on L will update automatically. Their values will correspond to the values of L, whether or not they existed within the UI at the time that L was last updated. This is similar to but more useful than the Shiny command isolate(). This approach can be applied to thousands of data points in one line of code (i.e. L <- R), rather than requiring thousands of individual calls to isolate(). For instance, if the user changes the number of treatment arms from 2 to 3 and commits this change, the potentially thousands of inputs sitting in L for arm #3 will be used to inform all of the inputs for that arm, even if this is the first time that arm was brought into play (and therefore all the inputs for arm #3 didn’t exist at the time). This emphasises the importance of making sure that D contains placeholders for all possible permutations of inputs.

Cost-effectiveness models invariably require a large number of interdependent dynamic renderUI(), especially for the calculation of drug costs, which can follow several different methodologies (e.g. flat dosing, IV medications, dose banding, tracking individual pill consumption). The “DSLR” approach enables a switch to trigger a totally different user interface with different inputs and outputs that are stored and ‘remembered’ separately for any number of independently modeled drugs depending on what type of drug is selected in the UI, without the need for any reactive() or isolate() calls whatsoever.

As discussed above, changes in L immediately change the ui elements directly. Functionally, this works the same way as the updateXXXInput family of commands, but without a need to use them, saving potentially tens of thousands of lines of code. All UI elements are rendered within a renderUI (i.e. server-side) and the value, selected, choices etc arguments for those UI elements refer directly to L, meaning that if L changes, those arguments change, triggering a refresh of those UI elements. However, changing the UI element values should not immediately loop back to L. This is because this would create an infinite loop due to the very small (microseconds) delay between updating a UI element value and the server recognising the change. The user changes a value in the UI, and this triggers the server, updating L, but at the same time the value in L is now different to the value in UI, so the UI is updated to be in line with L whilst the server is still processing the change. Consequently, the server now forever alternates between two values for that UI input. Using R and only updating L via a button or other trigger avoids this. A set of observeEvent calls linked to buttons to populate L using R can be used, whilst another set of observeEvent events update and process R when the UI changes. In short, the process includes the following steps:

-

Outside of the server (typically in global.R): Define

D– the default values.-

For nested or interdependent elements, use

lapplyinside of a function to generate n sets of default inputs, given each selection. This avoids error and keeps the structure ofD,R, andLstandardised -

A more advanced approach could be to generate

nsets of default inputs where n is not the maximumnallowed, and then introduce a trigger (i.e.observeEvent) into the server code which expandsRandLautomatically with default values when the value forninRexceedsninD. However, one must ensure that the values inside ofRare not overwritten with default values when changingn!

-

-

Inside of the server (preferably at the beginning): Define

RandL.-

Due to the nature of the

reactiveValuescommand, one cannot simply enterR <- D. Instead, the 1st level elements need to be passed along. E.g.R <- reactivevalues(Basic = D$Basic). Fortunately, this is just a few lines of code and is quite transparent and easy to read, showing the data being passed along fromDintoRandL. -

Remember if new 1st level elements are added to